Introduction

Cloud datacenters have become the most important IT infrastructure that people use every day. Building efficient future datacenters will require the collective efforts of the entire global community. To provide a platform that brings together the most important and forward-looking work in the area for intriguing and productive discussions, the 4th Workshop on Hot Topics on Data Centers (HotDC 2019) will be held in Beijing, China on December 19th, 2019.

HotDC 2019 consists of by-invitation-only presentations from top academic and industrial groups around the world. The topics cover a wide range of datacenter-related issues, including state-of-the-art technologies for server architecture, storage systems, datacenter networks, and resource management. In addition, HotDC 2019 provides a special session of invited talks presenting recent research from the datacenter team at the Institute of Computing Technology, Chinese Academy of Sciences.

The HotDC workshop aims to provide a forum for cutting-edge datacenter research, where researchers and engineers can exchange ideas and engage in discussions with colleagues from around the world. Welcome to HotDC 2019!

Sponsors

Organizing Committee

General Chair

Yungang Bao, Institute of Computing Technology, Chinese Academy of Sciences

TPC Chair

Ke Liu, Institute of Computing Technology, Chinese Academy of Sciences

Publicity Committee

Zhiwei Lai, Institute of Computing Technology, Chinese Academy of Sciences

Xiaoyue Qiu, Institute of Computing Technology, Chinese Academy of Sciences

Linrong Xie, Institute of Computing Technology, Chinese Academy of Sciences

Jing Guo, Institute of Computing Technology, Chinese Academy of Sciences

Organization Committee

Dejun Jiang, Institute of Computing Technology, Chinese Academy of Sciences

Qun Huang, Institute of Computing Technology, Chinese Academy of Sciences

Sa Wang, Institute of Computing Technology, Chinese Academy of Sciences

Wenkai Wan, Institute of Computing Technology, Chinese Academy of Sciences

Wenya Hu, Institute of Computing Technology, Chinese Academy of Sciences

Biwei Xie, Institute of Computing Technology, Chinese Academy of Sciences

Yuqing Zhu, Institute of Computing Technology, Chinese Academy of Sciences

Workshop Schedule

Conference Venue: Academic Hall, Conference Center, UCAS Yanqi Lake Campus (Huairou), Beijing

Date: December 19th, 2019

| Time | Session / Topic | Speaker / Chair |
| --- | --- | --- |
| 08:50 - 09:00 | Opening remarks | |
| | Keynote Session 1: Datacenter System and Networking | Chair: Ke Liu |
| 09:00 - 09:40 | Datacenter Networking: A 10-Year Research Retrospection | Kai Chen |
| 09:40 - 10:20 | When Serverless Means No Server | Yiying Zhang |
| 10:20 - 10:40 | Coffee break | |
| | Keynote Session 2: Datacenter Monitor and Diagnosis | Chair: Sa Wang |
| 10:40 - 11:20 | Understanding and Finding Timing Bugs in Cloud Systems | Shan Lu |
| 11:20 - 12:00 | Automating Failure Diagnosis for Distributed Systems | Yongle Zhang |
| 12:00 - 12:50 | Lunch | |
| | Keynote Session 3: Datacenter Storage | Chair: Dejun Jiang |
| 12:50 - 13:30 | Secure Persistent Memory Systems: Hardware Software Co-design | Yu Hua |
| 13:30 - 14:10 | Cloud Storage Systems: Latency Analysis and Caching Strategies | Vaneet Aggarwal |
| 14:10 - 14:30 | Coffee break | |
| | Keynote Session 4: Datacenter Hardware | Chair: Yuqing Zhu |
| 14:30 - 15:10 | Architecture Directions for Data Centre Server Processors | G S Madhusudan |
| 15:10 - 15:50 | Time and Resource-Efficient Performance and Power Simulation for Building Next-Generation Processors | Trevor E. Carlson |
| 15:50 - 16:10 | Coffee break | |
| | Keynote Session 5: Datacenter Application and Security | Chair: Yuhang Liu |
| 16:10 - 16:50 | Lessons from Building A Stream Processing Engine from the Ground Up | Felix Xiaozhu Lin |
| 16:50 - 17:30 | Privacy Threats in Edge-Cloud Artificial Intelligent Systems | Tianwei Zhang |
| | Poster Session | Chair: Qun Huang |
| 17:30 - 18:30 | Venue: The lobby outside the Academic Hall | |

Keynote Speakers

Topic: Datacenter Networking: A 10-Year Research Retrospection

Speaker: Kai Chen

Abstract: Datacenters are the main infrastructure for big data, machine learning, and cloud systems. Over the past 10 years, we have been researching datacenter networking along multiple dimensions, including network architecture, routing, load balancing, congestion control, and flow scheduling, using approaches ranging from traditional expert heuristics to modern machine-generated intelligence. In this talk, I will introduce a lab-scale datacenter cluster we have built at HKUST and give an overview of some research projects we developed on top of it. In particular, I will focus on how we found and defined these critical research problems, the challenges we encountered, and the tradeoffs we made to address them. Some of these system designs have been adopted in industry.

Bio: Kai Chen is an Associate Professor at the Hong Kong University of Science and Technology (HKUST), the Director of the System Networking Lab (SING Lab) and the WeChat-HKUST Joint Lab for Artificial Intelligence Technology (WHAT Lab), and the Executive Vice-President of the Hong Kong Society of Artificial Intelligence & Robotics (HKSAIR). He received his BS and MS from the University of Science and Technology of China (USTC) in 2004 and 2007, and his PhD from Northwestern University in 2012. His areas of interest include data center networking, machine learning systems, and privacy-preserving AI infrastructure. His work has been published in top venues such as SIGCOMM, NSDI and TON. He is the steering committee co-chair of APNet, and serves on the program committees of SIGCOMM, NSDI, INFOCOM, etc., and on the editorial boards of IEEE/ACM Transactions on Networking, Big Data, and Cloud Computing.

Topic: When Serverless Means No Server

Speaker: Yiying Zhang

Abstract: Datacenters have been using the “monolithic” server model for decades, where each server hosts a set of hardware devices like CPU and DRAM on a motherboard and runs an OS on top to manage the hardware resources. In recent years, cloud providers have offered a type of service called “serverless computing” in response to cloud users’ desire not to manage servers, VMs, or containers. Serverless computing quickly gained popularity, but today’s serverless computing still runs on datacenter servers. The monolithic server model is not the best fit for serverless computing, and it fundamentally restricts datacenters from achieving efficient resource packing, hardware rightsizing, and great heterogeneity.

Bio: Yiying Zhang is an assistant professor in the Computer Science and Engineering Department at the University of California, San Diego. She works in the broad field of datacenter research. Her lab builds new OSes, hardware, distributed systems, and networking solutions for next-generation datacenters. She won an OSDI best paper award in 2018, a SYSTOR best paper award in 2019, and an NSF CAREER award in 2019. Yiying received her Ph.D. from the Department of Computer Sciences at the University of Wisconsin-Madison. Before joining UCSD in fall 2019, she was an assistant professor at Purdue ECE for four years.

Topic: Understanding and Finding Timing Bugs in Cloud Systems

Speaker: Shan Lu

Abstract: Non-deterministic timing bugs are among the most difficult types of bugs to avoid and detect. They take on new forms and pose increasing threats in large-scale cloud systems. This talk will first present our effort in understanding timing bugs in open-source and commercial cloud systems. It will then discuss a series of models and techniques that we built to detect message-timing and fault-timing bugs in open-source cloud systems. Finally, it will present our recent collaboration with Microsoft Research, where a novel fault injection technique is used to greatly improve the testing procedure of cloud software, with more than 1000 timing bugs discovered in the Microsoft Azure system. This series of work has been published at ASPLOS 2016, ASPLOS 2017, ASPLOS 2018, HotOS 2019, and SOSP 2019 (Best Paper).

Bio: Shan Lu is a Professor in the Department of Computer Science at the University of Chicago. She received her Ph.D. from the University of Illinois, Urbana-Champaign, in 2008. Her research focuses on software reliability and efficiency. Shan is an ACM Distinguished Member (2019), an Alfred P. Sloan Research Fellow (2014), a Distinguished Educator Alumnus of the Department of Computer Science at the University of Illinois (2013), and an NSF CAREER Award recipient (2010). Her co-authored papers won a Google Scholar Classic Paper 2017, Best Paper Awards at SOSP 2019, OSDI 2016 and FAST 2013, ACM-SIGSOFT Distinguished Paper Awards at ICSE 2019, ICSE 2015 and FSE 2014, an ACM-SIGPLAN Research Highlight Award at PLDI 2011, and an IEEE Micro Top Picks selection from ASPLOS 2006. Shan currently serves as the Chair of ACM-SIGOPS and the technical program co-chair for the 2020 USENIX Symposium on Operating Systems Design and Implementation (OSDI'20).

Topic: Automating Failure Diagnosis for Distributed Systems

Speaker: Yongle Zhang

Abstract: Distributed software systems have become the backbone of Internet services. Failures in production distributed systems have severe consequences. A 30-minute outage of Amazon in 2013 caused a two million dollar loss in revenue. Moreover, the frequency of failures rises with the increasing complexity of software systems. 2019 has experienced noticeably more Internet outages and is considered the “year of outages”.

Bio: Yongle Zhang is a PhD student in the Department of Electrical and Computer Engineering, University of Toronto, working with Prof. Ding Yuan. His research interest is automated failure diagnosis for software systems. He has published papers on failure diagnosis in SOSP and OSDI.

Topic: Secure Persistent Memory Systems: Hardware Software Co-design

Speaker: Yu Hua

Abstract: Due to their salient features of high density and near-zero standby power, non-volatile memories (NVMs) are promising candidates for next-generation main memory. Because they are non-volatile, NVMs are vulnerable to physical access attacks. Memory encryption can mitigate these security vulnerabilities, but it increases the number of bits written to NVMs due to the diffusion property and thereby aggravates NVM wear-out. Counter mode encryption is often used for its high security level and low decryption latency, but it introduces a new persistence problem for crash-consistency guarantees, because data and its counter must be persisted atomically. To address these problems, existing work relies on a large battery backup or complex modifications to both the hardware and software layers via a write-back counter cache, which are inefficient in real-world systems. Our recent schemes propose application-transparent and deduplication-aware secure persistent memory to guarantee the atomicity of data and counter writes without the need for battery backup or software-layer modifications. To further support multi-reader and multi-writer concurrency, we use a write-optimized and high-performance hashing index scheme with a low-overhead consistency guarantee and cost-efficient resizing.

Bio: Yu Hua is a Professor at Huazhong University of Science and Technology. He was a Postdoctoral Research Fellow at the University of Nebraska-Lincoln in 2010-2011. He obtained his B.E. and Ph.D. degrees from Wuhan University in 2001 and 2005, respectively. His research interests include cloud storage systems, non-volatile memory, big data analytics, etc. His papers have been published in major conferences and journals, including OSDI, FAST, MICRO, USENIX ATC, ACM SoCC, SC, HPDC, DAC. He serves multiple international conferences, including FAST, ASPLOS, SOSP, ISCA, USENIX ATC, SC, SoCC. He is a distinguished member of CCF, a senior member of ACM and IEEE, and a member of ACM SIGOPS and USENIX. He has been appointed as a Distinguished Speaker of ACM and CCF.

Topic: Cloud Storage Systems: Latency Analysis and Caching Strategies

Speaker: Vaneet Aggarwal

Abstract: Consumers are engaging in more social networking and e-commerce activities these days and are increasingly storing their documents and media in online storage. Businesses are relying on Big Data analytics for business intelligence and are migrating their traditional IT infrastructure to the cloud. These trends cause the demand for online data storage to rise faster than Moore’s Law. Erasure coding techniques are widely used for distributed data storage since they provide space-optimal data redundancy to protect against data loss. Cost-effective, network-accessible storage is a strategic infrastructural capability that can serve many businesses. These customers, however, have very diverse requirements for latency, cost, security, etc. In this talk, I will describe how to characterize latency, for which we give and analyze a novel scheduling algorithm. Further, I will present a novel concept of functional caching in storage systems and describe its advantages compared to duplication in current caching systems. We will validate the results for optimizing a tradeoff between latency and cost using implementations on an open source distributed file system on a public test grid.

Bio: Vaneet Aggarwal received the B.Tech. degree in 2005 from the Indian Institute of Technology, Kanpur, India, and the M.A. and Ph.D. degrees in 2007 and 2010, respectively, from Princeton University, Princeton, NJ, USA, all in Electrical Engineering. He is currently an Associate Professor in the School of IE and ECE (by courtesy) at Purdue University, West Lafayette, IN, where he has been since Jan 2015. He was a Senior Member of Technical Staff Research at AT&T Labs-Research, NJ (2010-2014), Adjunct Assistant Professor at Columbia University, NY (2013-2014), and VAJRA Adjunct Professor at IISc Bangalore (2018-2019). His current research interests are in communications and networking, cloud computing, and machine learning. Dr. Aggarwal received Princeton University's Porter Ogden Jacobus Honorific Fellowship in 2009, the AT&T Vice President Excellence Award in 2012, the AT&T Key Contributor Award in 2013, the AT&T Senior Vice President Excellence Award in 2014, the 2017 Jack Neubauer Memorial Award recognizing the Best Systems Paper published in the IEEE Transactions on Vehicular Technology, and the 2018 Infocom Workshop HotPOST Best Paper Award. He is on the Editorial Board of the IEEE Transactions on Communications, the IEEE Transactions on Green Communications and Networking, and the IEEE/ACM Transactions on Networking.

Topic: Architecture Directions for Data Centre Server Processors

Speaker: G S Madhusudan

Abstract: Current server processor architectures are a compromise that attempts to cater to a broad range of workloads. The recent trend of adding accelerators ameliorates this compromise a little but does not go far enough in rethinking processors, especially for data centre workloads. Server processors need differentiated cores for running kernel and virtualized user workloads, lightweight compartments for security (including extending security across sockets), TM based LS pipelines for memory hierarchies with large variations in memory latency, and core-to-core message passing mechanisms.

Bio: G S Madhusudan is the CEO and co-founder of InCore Semiconductors, India’s first processor IP company. He is also one of the coordinators of the IIT Madras Shakti RISC-V project and a collaborator with the Robert Bosch Centre for Data Sciences and AI. He is a veteran of the electronics and computing technology industry with more than three decades of experience in running tech startups and R&D organizations across the world. He is an advisor to various government departments and was a member of the GOI AI task force.

Topic: Time and Resource-Efficient Performance and Power Simulation for Building Next-Generation Processors

Speaker: Trevor E. Carlson

Abstract: Driven by the demand for higher overall performance in general-purpose processing, fast simulation of next-generation designs is critical. Architects need to understand the potential performance benefits of new techniques as well as the energy and power trade-offs that need to be made to accelerate general-purpose workloads. This becomes increasingly important as datacenters continue to grow in size, IoT devices proliferate, and designers consider open-source solutions as an alternative to commercial ones. The number of potential architects is increasing overall, but their tools have not been keeping pace.

Bio: Trevor E. Carlson is an assistant professor at the National University of Singapore (NUS). He received his B.S. and M.S. degrees from Carnegie Mellon University in 2002 and 2003 and his Ph.D. from Ghent University in 2014, and worked for three years as a postdoctoral researcher at Uppsala University in Sweden until 2017. He has also spent a number of years working in or with industry, at IBM from 2003 to 2007, at the imec research lab from 2007 to 2009, and with the Intel ExaScience Lab from 2009 to 2014. Overall, he has over 16 years of computer systems and architecture experience in both industry and academia.

Topic: Lessons from Building A Stream Processing Engine from the Ground Up

Speaker: Felix Xiaozhu Lin

Abstract: This talk gives an overview of StreamBox, our research stream processing engine designed and optimized for single commodity servers. Started in 2016, StreamBox was incubated to answer one question: to what extent can we push the performance limit of stream processing on a single machine? Over the years, we have built three versions of StreamBox and accordingly explored three ideas: exploiting multicore for high parallelism, exploiting 3D-stacked DRAM for efficient data movement, and exploiting hardware enclaves for strong security guarantees. I will share the pitfalls we ran into as well as the valuable lessons we learned.

Bio: Felix Xiaozhu Lin is an assistant professor at Purdue ECE. He received his PhD in CS from Rice and his BS/MS from Tsinghua. At Purdue, he leads the Xroads systems exploration lab (XSEL) to accelerate and safeguard important computing scenarios. He and his students measure and build systems software with diverse techniques, including novel OS structures, kernel subsystem design, binary translation, and user-level runtimes. See http://felixlin.org for more information.

Topic: Privacy Threats in Edge-Cloud Artificial Intelligent Systems

Speaker: Tianwei Zhang

Abstract: The past few years have witnessed the fast development of deep learning technology. The proliferation of the Internet of Things calls for deep learning applications running on edge devices. Due to the limited computation resources and battery capacity of edge devices, a promising strategy is to offload part of the computation from local devices to remote cloud servers. Such edge-cloud collaborative systems can significantly improve the performance and reduce the energy consumption of AI applications. However, the interactions between edge devices and cloud servers can also bring privacy risks to deep learning assets (data, models, etc.). In this talk, I will present potential privacy attacks against edge-cloud collaborative systems. I will introduce several techniques that enable an untrusted service provider to fully recover sensitive inference data. I will also discuss possible defense solutions to make artificial intelligence systems more trustworthy and efficient.

Bio: Dr. Tianwei Zhang is currently an assistant professor in the School of Computer Science and Engineering at Nanyang Technological University. Before joining NTU, he worked at Amazon as a software engineer from 2017 to 2019. He received his Bachelor’s degree in physics from Peking University, China, in 2011, and his Ph.D. degree in Electrical Engineering from Princeton University in 2017. His research focuses on computer system security. He is particularly interested in distributed system security, computer architecture security, and machine learning security.

Call For Student Posters

This year HotDC has a student poster session for students to showcase their research in an informal setting. The poster session takes a broad view of topics of interest, which include new ideas relating (but not limited) to data centers and cloud computing.

We strongly encourage every student attending HotDC to prepare a poster. The HotDC registration fee can be waived for students who present posters. The HotDC 2019 committee will review all posters and vote for the best poster. Students do not need to submit anything in advance; they only need to bring their posters and present them during the HotDC poster session.

If you are willing to present your work in the poster session, please send your name, affiliation, and poster topic to hotdc@ict.ac.cn, and bring your poster to the conference venue on December 19th.

Expected Poster Format

A poster is A0 paper size in portrait mode (841 x 1189 mm), to which you can affix visually appealing material that describes your research topic or direction. You should prepare the best material (visually appealing and succinct) that effectively communicates your targeted problem, why it is important, what the state of the art is, what is wrong or missing, your insight, initial design, preliminary results (if any), and why your work is novel.

Registration

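Registration Fees

Registration fee: RMB 1,000 per person

Student registration fee: RMB 400 per person

Note: Students who sign up for the poster session do not need to pay the registration fee. Please send your name, affiliation, and poster topic to hotdc@ict.ac.cn, and bring your poster to the conference venue on December 19th.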

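Payment Methods

1. Bank transfer (recommended, so that your invoice can be issued promptly):

Payee: Institute of Computing Technology, Chinese Academy of Sciences (中国科学院计算技术研究所)

Account number: 0200004509088123135

Bank: ICBC Beijing Branch, Haidian West District Sub-branch (工行北京市分行海淀西区支行)

When making the transfer, be sure to state in the remarks field: HOTDC2019 and the name of each registrant

Note: If you pay by bank transfer, be sure to email the transfer receipt and the conference registration fee receipt form to hotdc@ict.ac.cn

(The transfer receipt is your proof of payment; the registration receipt form will be used to issue your invoice.)

2. On-site payment:

You can pay on site via WeChat and fill in the conference registration fee receipt form on site.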

Contacts

Ke Liu

WeChat group:

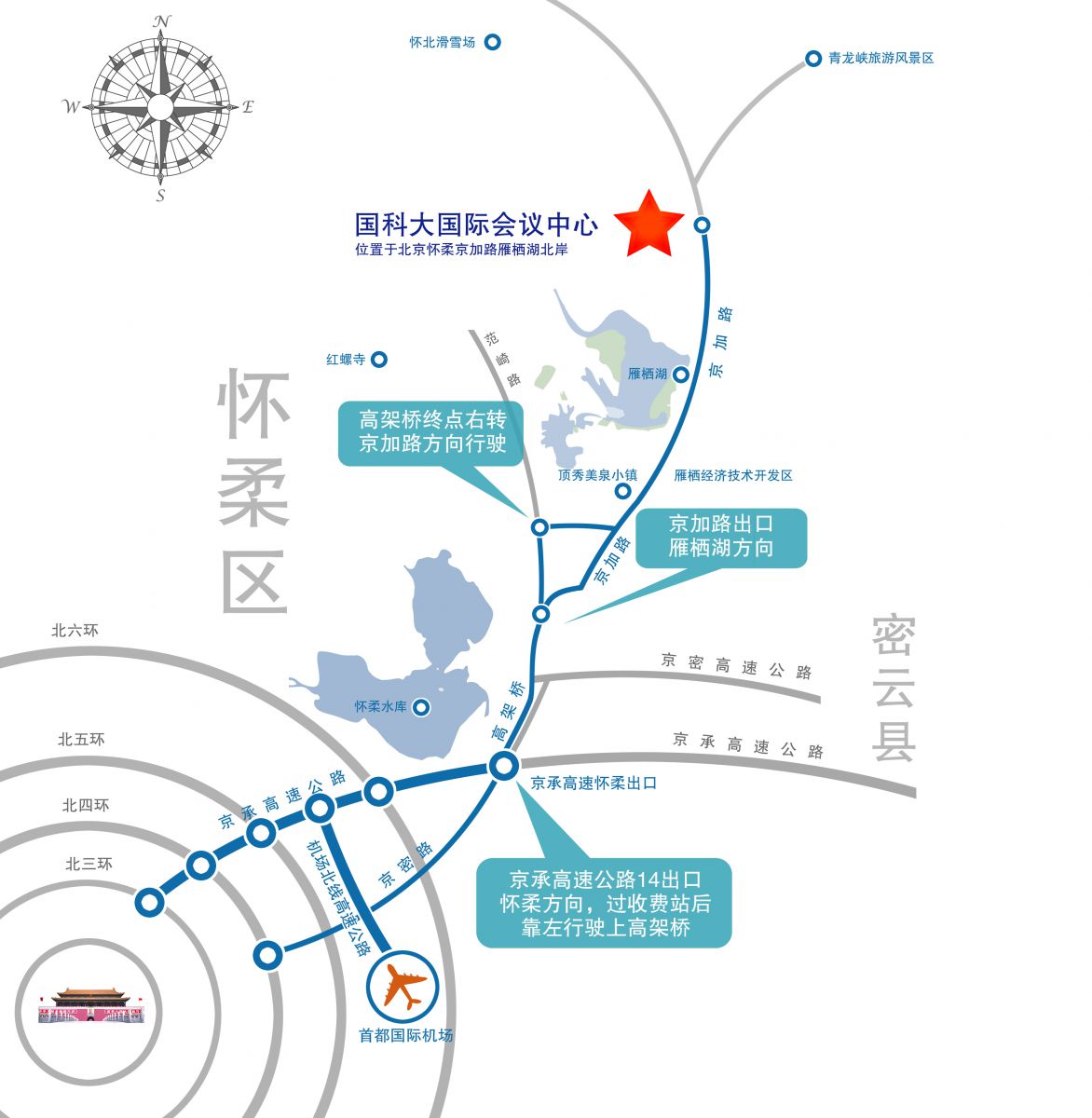

Location

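International Conference Center, University of Chinese Academy of Sciences, Beijing (Huaibeizhuang Village, Yanqi Lake, Huairou District, Beijing)

If you would like our help booking a room at the UCAS International Conference Center, please email the following information to hotdc@ict.ac.cn:

1. Guest name and affiliation

2. Room type, check-in date, check-out date, and whether breakfast is needed

Please put “hotdc房间预定” (HotDC room reservation) in the email subject line.

Hotel room types and rates:

Single room: RMB 360 per night; RMB 420 per night with breakfast

Double room: RMB 390 per night; RMB 450 per night with breakfast

(All prices are discounted conference rates.)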